In recent months, the world of AI image generation has become quite the conversation piece due to the viral spotlight cast on DALL-E Mini.

This AI tool allows users to input text prompts and receive nine visualizations of that prompt in under three minutes. In case you missed that, DALL-E Mini isn’t sourcing images that relate to your text prompt – it’s creating original images for you.

Custom images in less than 180 seconds.

The DALL-E Mini announcement led to traffic surges and a sharing extravaganza of newly created images, and many people quickly became aware of the possibilities of artificial intelligence creating artwork.

What is DALL-E Mini?

Well, it’s an artificial intelligence model that creates images guided by the user’s text prompts, based on what it learned from unfiltered images on the internet. It originally borrowed the “DALL·E” part of its name from an OpenAI project of the same name, announced in January 2021.

Later, OpenAI asked the DALL-E Mini project to change its name to make clear that the two were not associated. DALL-E Mini, now Craiyon, blew up with meme-like popularity across social media in midsummer 2022 and brought attention to similar projects such as DALL·E 2, Midjourney, Google’s DeepDream, and many more, which went from niche, up-and-coming technology to borderline household names.

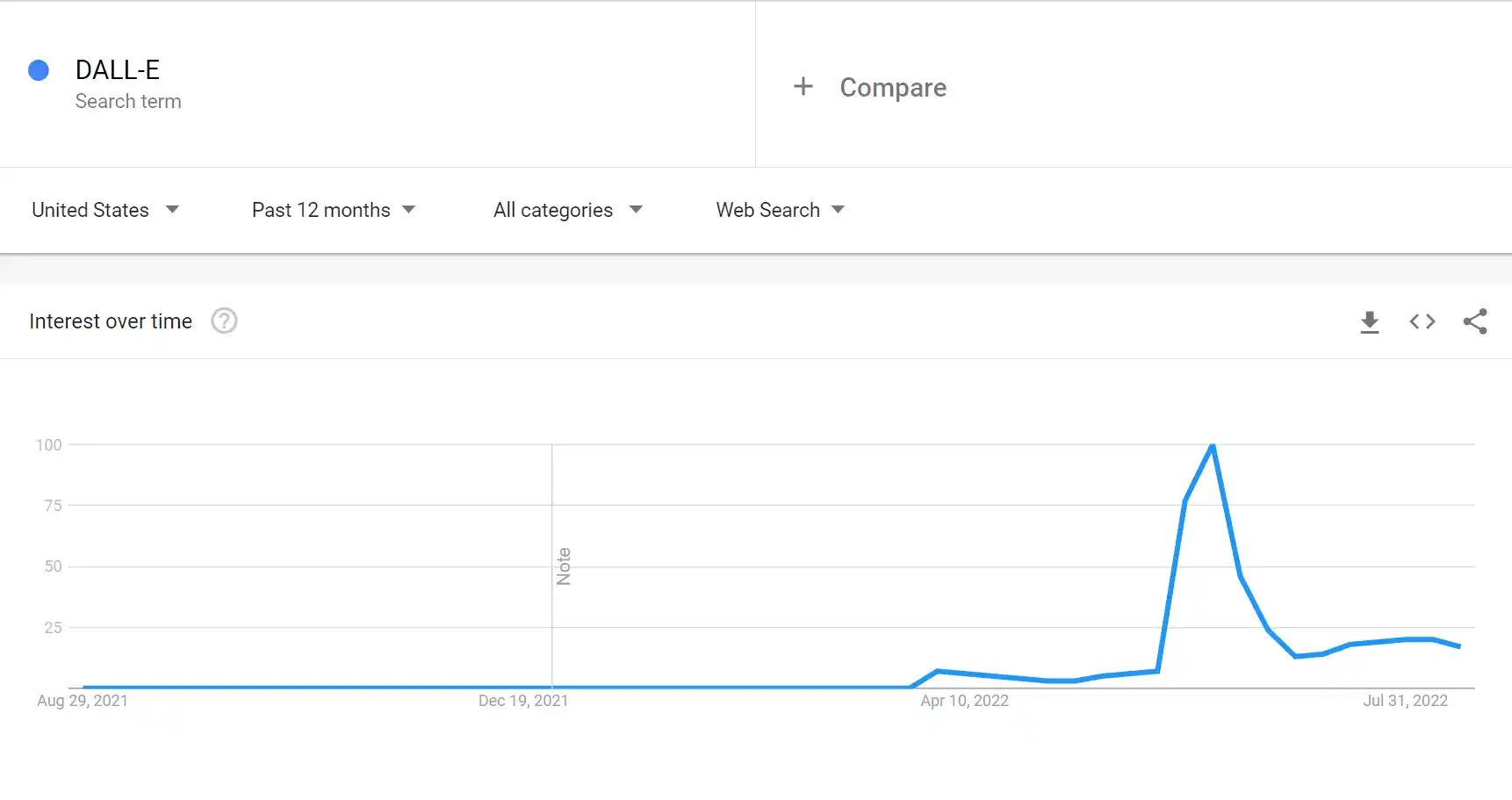

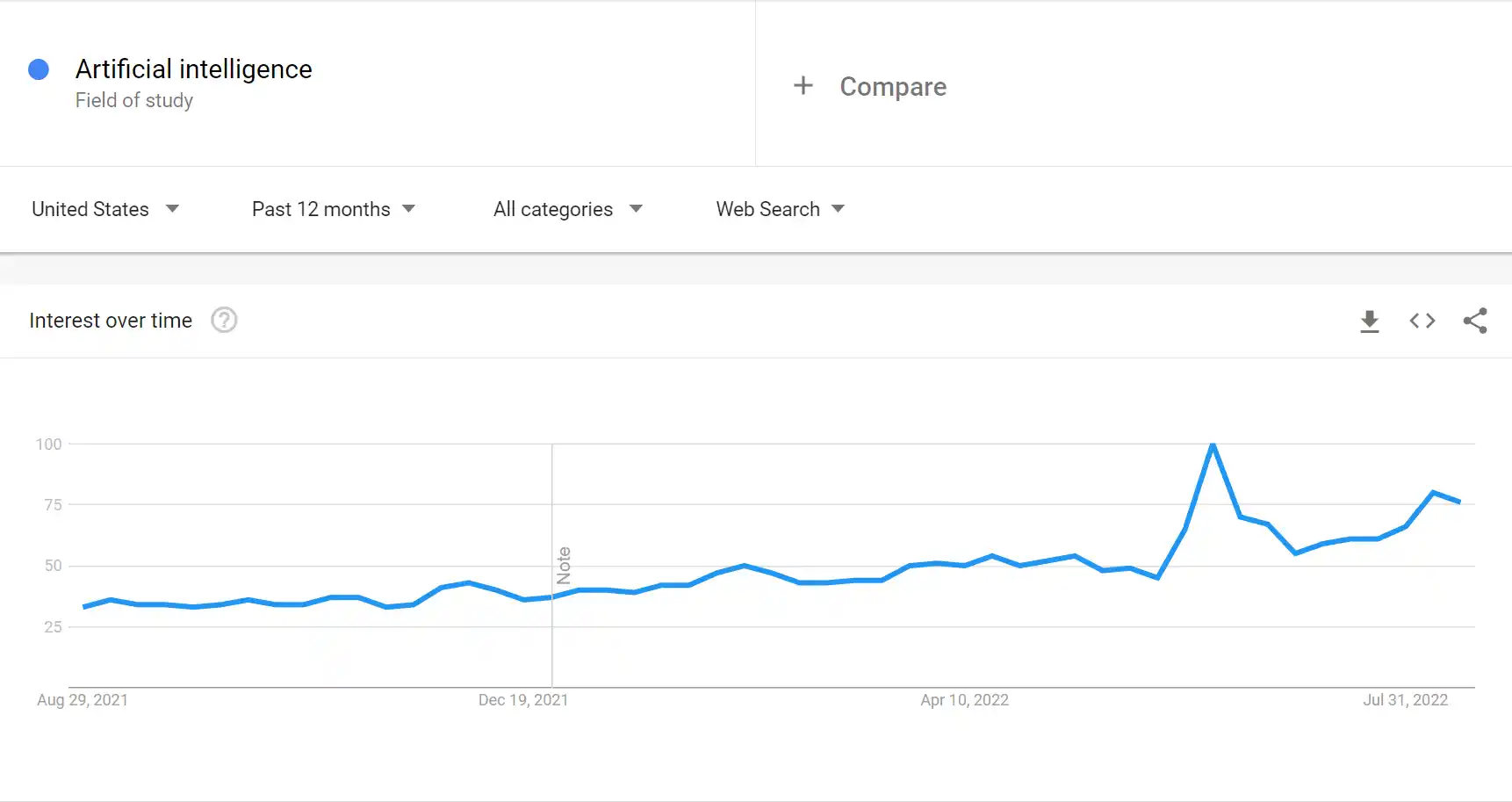

Search interest in “DALL-E” and “Artificial Intelligence” rose in close correlation around the launch of DALL-E Mini. Both terms jumped from a Google Trends interest score of under 1 all the way to 100 in a few short days, well after OpenAI’s initial announcement of DALL·E in January 2021.
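The “<1” to 100 range matches the relative-interest scale Google Trends uses: each point is the term’s share of all searches, and the highest point on the chart is set to 100. The sketch below approximates that scaling with made-up search shares; it is an illustration under that assumption, not Google’s actual computation, and the function name is hypothetical.

```python
def trends_scale(search_shares):
    """Approximate Google Trends-style scaling (an assumption, not Google's code).

    search_shares: the term's share of all searches at each time point.
    The peak becomes 100; non-zero values that fall below 1 are shown as "<1".
    """
    peak = max(search_shares)
    scaled = []
    for share in search_shares:
        value = share / peak * 100
        if 0 < value < 1:
            scaled.append("<1")
        else:
            scaled.append(round(value))
    return scaled


# Made-up weekly shares: near zero for weeks, then a sudden viral spike.
print(trends_scale([0.002, 0.003, 0.4, 0.8]))  # ['<1', '<1', 50, 100]
```

Because every point is relative to the peak, a term can sit at “<1” for a long time and still jump to 100 the moment a spike dwarfs its earlier interest.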

How does AI image generation actually work?

For years, machine-learning algorithms have been able to take images and produce text describing what’s in them, effectively creating automated image captions. Text-to-image generators work in the opposite direction: they take text and produce images.

Training Data

The AI is trained on large, diverse datasets of images paired with captions. These datasets are often built by scraping images from across the internet and pairing each one with its alt text, which describes what’s in it.
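Here is a minimal sketch, using only Python’s standard-library `html.parser`, of how a scraped page can yield image-caption pairs from alt text. The class and function names are hypothetical, and real dataset pipelines add far more filtering and deduplication at a much larger scale.

```python
from html.parser import HTMLParser


class AltTextCollector(HTMLParser):
    """Collect (image URL, alt text) pairs from the <img> tags in a page."""

    def __init__(self):
        super().__init__()
        self.pairs = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src")
        alt = (attributes.get("alt") or "").strip()
        # Skip images without a source or without a usable caption.
        if src and alt:
            self.pairs.append((src, alt))


def image_caption_pairs(html):
    """Return (image URL, caption) pairs found in an HTML string."""
    collector = AltTextCollector()
    collector.feed(html)
    collector.close()
    return collector.pairs


page = (
    '<img src="duck.jpg" alt="A yellow rubber duck">'
    '<img src="spacer.gif" alt="">'
    '<img src="pink.png" alt=" A pink rubber duck in a bathtub "/>'
)
print(image_caption_pairs(page))
# [('duck.jpg', 'A yellow rubber duck'), ('pink.png', 'A pink rubber duck in a bathtub')]
```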

Deep Learning

The AI then calculates a massive number of metrics to define the qualities of images and describe the details in them.

For example, one image of a rubber duck may be yellow, while another might be pink, so the AI develops a metric for color, among many others.
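To make the idea of a “color metric” concrete, here is a hand-written stand-in. It is only an illustration: real models learn their metrics from data rather than having them coded by hand, and the function names and pixel values below are made up.

```python
def mean_color(pixels):
    """Average (R, G, B) over a list of pixels with channels in 0-255."""
    count = len(pixels)
    return tuple(sum(pixel[channel] for pixel in pixels) / count for channel in range(3))


def yellowness(pixels):
    """Hand-written stand-in for a learned color metric, scored 0.0 to 1.0.

    Yellow means high red and green with low blue, so the score is the average
    of red and green minus blue, scaled to 0-1 and clipped at 0.
    """
    red, green, blue = mean_color(pixels)
    return max(0.0, ((red + green) / 2 - blue) / 255)


# Made-up pixel samples from two rubber-duck photos.
yellow_duck = [(250, 220, 30), (240, 210, 20)]
pink_duck = [(250, 150, 200), (240, 160, 210)]

print(round(yellowness(yellow_duck), 2))  # 0.8
print(yellowness(pink_duck))              # 0.0
```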

Latent Space

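The model maps each image to coordinates in a multidimensional space, with each dimension corresponding to one of the hundreds of metrics that make an image unique. Positions in this space can then be matched to what a text prompt describes, which helps the model pin down what the prompt is looking for.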

The metrics could be the blueness or cloudiness of the sky in a given image, but the metrics a model creates are often far less simple or intuitive than anything a human would define. All of this happens on the platform side and is invisible to the user.
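A toy version of this idea: give each image hypothetical coordinates along a few readable dimensions, give the prompt coordinates too, and use cosine similarity to find which image points in the most similar direction. The three dimensions, file names, and numbers are invented for illustration. Real latent spaces have hundreds of dimensions without human-readable labels, and a generator uses the prompt’s position to guide new pixels rather than to look up an existing image.

```python
import math


def cosine_similarity(a, b):
    """1.0 means the two vectors point the same way; near 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-D coordinates: (yellowness, duck-ness, sky-ness).
IMAGE_EMBEDDINGS = {
    "yellow_duck.jpg": [0.9, 0.8, 0.1],
    "pink_duck.jpg": [0.1, 0.9, 0.1],
    "cloudy_sky.jpg": [0.1, 0.0, 0.9],
}


def closest_image(prompt_embedding, embeddings):
    """Return the image whose coordinates are most similar to the prompt's."""
    return max(embeddings, key=lambda name: cosine_similarity(prompt_embedding, embeddings[name]))


# Made-up coordinates for the prompt "a yellow rubber duck".
print(closest_image([0.8, 0.9, 0.0], IMAGE_EMBEDDINGS))  # yellow_duck.jpg
```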

Generation

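This final step of the process involves composing pixels into something recognizable. In diffusion models, for example, generation starts from random noise that the model gradually refines into an image guided by the prompt. There’s a great deal of randomness in this process, so you’re unlikely to get the same result twice.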

Entering the same prompt into two AI models will often produce vastly different results. The primary reasons for these output variances are:

- Training dataset

- Metrics of the model

- Randomness of diffusion
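The “randomness of diffusion” point can be illustrated with a toy loop: start from random noise, then repeatedly nudge it toward a target while adding a shrinking amount of fresh noise. This is a loose analogy written for illustration, not an actual diffusion model; the `toy_generate` name is hypothetical, and its `target` list stands in for whatever the prompt describes. It also shows why fixing a random seed makes a run repeatable, while different seeds give different results.

```python
import random


def toy_generate(target, steps=50, seed=None):
    """Toy 'generation': begin with noise and refine it toward a target.

    Each step closes 20% of the gap to the target and adds fresh noise whose
    size shrinks as the steps go on. NOT a real diffusion model.
    """
    rng = random.Random(seed)
    pixels = [rng.random() for _ in target]  # start from pure noise
    for step in range(steps):
        noise_scale = 0.1 * (1 - step / steps)  # less noise in later steps
        pixels = [
            p + 0.2 * (t - p) + rng.gauss(0, noise_scale)
            for p, t in zip(pixels, target)
        ]
    return pixels


target = [0.2, 0.5, 0.8]
run_a = toy_generate(target, seed=1)
run_b = toy_generate(target, seed=2)
run_c = toy_generate(target, seed=1)

print(run_a == run_c)  # True: same seed, same result
print(run_a == run_b)  # different seeds give different results
```

Both runs end up close to the target, just as two generations from one prompt share a subject, but the leftover noise makes each one slightly different.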

“A polka-dotted elephant”: DALL-E 2 vs. Midjourney

Don’t panic! AI isn’t replacing humans anytime soon.

Although the impact depends on the industry, AI tools offer potentially game-changing opportunities and use cases. If you’re working in a creative field, these images could serve as anything from the final product itself to inspiration for a composition or an overall concept.

While some artists fear being replaced by AI, creative direction still comes from the human who writes the prompt, and prompting can be a tricky process that requires refinement.

As the technology stands today, it’s not yet as simple as waving a magic wand. But for some creative applications, AI can provide a near-perfect output.

How will AI tools impact brand content creation?

Teams of all sizes struggle to produce the impactful creative content that a strong brand needs, and with these tools the barrier to entry has dropped to the floor.

We’re not prone to melodrama, but…

Are we watching the dawn of rapid-fire content creation at minimal production cost?

If so, this would indeed be a game changer for upstarts that need to create unique assets quickly to build a brand and work through long lists of specs for revenue-driving assets. With the right prompt, a creative vision, and a little design savvy to clean things up, AI tools can fit neatly into the workflow of any small creative team today.

Here are a few ideas for how AI can be used:

- Concept artwork for fictional characters or scenes

- Replacements for stock images in advertising and on websites

- Book covers and surrealist images

- Composition inspiration for a painting or any artwork

- High-detail, 3D-rendered styled images for conceptualizing products

- A guide to what is cliché or should be avoided

- Concepts for architectural design or urban planning

Is DALL-E Mini free? What about other AI tools?

Here’s an answer you’ll love: It depends.

Here’s a quick rundown of some of the top platforms and what hoops need to be jumped through to play around with the AI models:

Craiyon (formerly DALL-E Mini)

This tool is completely free to use, but is likely the least useful in a professional sense. The images are low resolution and offer little beyond entertainment or inspiration.

DALL·E 2

Currently, there is a waitlist to access this tool. If you work in a creative field, you’re more likely to get priority acceptance. Once you’re accepted, you’ll start with 50 free credits and can buy 115 more credits for $15 if you need them.

Midjourney

Midjourney runs on a public Discord server you can join, where you can create about 20-25 images for free using its bot and chat commands. Payment plans start at $10 for about 200 images.

When you create your account, you’ll start with about seven images’ worth of “energy.” Their pricing model is unusual: the starting plan of $19 per month recharges at a rate of 12 energy per hour (sounds wacky, but yep, this is how they’re doing it).

The upside of this approach is that if you take your time between uses, you’ll have more energy to work with.
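Using the prices quoted in this rundown ($15 for 115 DALL·E 2 credits, $10 for about 200 Midjourney images, and 12 energy per hour on the energy-based plan), a quick back-of-the-envelope comparison looks like this. The figures are only the ones stated here and may change. The rundown doesn’t say how many images one DALL·E 2 credit buys, so that cost stays per credit, and the energy line assumes the recharge isn’t capped, which isn’t specified either.

```python
# Prices as quoted in this article (2022); check each platform for current pricing.
CREDIT_PACK_PRICE_USD = 15   # DALL-E 2: $15 buys 115 credits
CREDIT_PACK_CREDITS = 115
DISCORD_PLAN_PRICE_USD = 10  # Midjourney: plans start at $10...
DISCORD_PLAN_IMAGES = 200    # ...for "about 200 images"
ENERGY_PER_HOUR = 12         # energy-based plan's recharge rate

cost_per_credit = CREDIT_PACK_PRICE_USD / CREDIT_PACK_CREDITS
cost_per_image = DISCORD_PLAN_PRICE_USD / DISCORD_PLAN_IMAGES
# Assumption: energy keeps accumulating with no cap (not stated in the article).
energy_per_day = ENERGY_PER_HOUR * 24

print(f"DALL-E 2 credit pack: ${cost_per_credit:.2f} per credit")  # $0.13
print(f"Midjourney $10 plan: ${cost_per_image:.2f} per image")     # $0.05
print(f"Energy plan: {energy_per_day} energy per idle day")        # 288
```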

What kind of results can you get from an AI prompt?

Let’s look at how to prompt on Midjourney, which you can try today for free on its Discord server.

You begin by entering a single line of text, but Midjourney also lets you create commands that add whole batches of keywords, linked images, and other specifications to your prompt.

For inspiration on crafting a compelling prompt, check out what other people are doing. Once you’ve created an account by joining the Discord server, you can also access Midjourney’s website, where you can view other users’ generated images along with the prompts and commands used to make them. This is especially helpful when learning how to prompt for specific styles and results.

Using a prompt like “infinite lush landscape” will produce four versions of what Midjourney thinks of that prompt within seconds. From there, you can request four new variations of one of the initial concepts, or get an upscaled version of one.

When viewing other users’ images, you can see all of the iterations they went through while refining their prompts, or guiding Midjourney toward their ideal result through these variations and upscaled images.

What goes into an AI prompt?

Using Midjourney again, let’s take a look at a more targeted prompt that explores an overall aesthetic.

Prompt: a modern ninja, crustpunk, samurai, tech wear, robot helmet, fire katana, atmospheric, photorealistic, unreal engine, rain
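Since prompts like this one are mostly comma-separated keywords, it can help to keep keyword lists and assemble them programmatically. The helper below is hypothetical and only builds text. `--ar` (aspect ratio) and `--no` (terms to exclude) are Midjourney prompt parameters, but check Midjourney’s documentation for the options your version supports.

```python
def build_imagine_prompt(keywords, aspect_ratio=None, exclude=None):
    """Join keywords into a Midjourney-style prompt, plus optional parameters.

    Hypothetical helper: it only builds text. aspect_ratio becomes the --ar
    parameter and exclude becomes --no; you would still enter the result in
    Midjourney's /imagine command on Discord.
    """
    prompt = ", ".join(k.strip() for k in keywords if k.strip())
    params = []
    if aspect_ratio:
        params.append(f"--ar {aspect_ratio}")
    if exclude:
        params.append("--no " + ", ".join(exclude))
    return " ".join([prompt, *params])


NINJA_KEYWORDS = [
    "a modern ninja", "crustpunk", "samurai", "tech wear", "robot helmet",
    "fire katana", "atmospheric", "photorealistic", "unreal engine", "rain",
]

print(build_imagine_prompt(NINJA_KEYWORDS, aspect_ratio="16:9"))
```

Keeping the keywords in a list makes it easy to swap one term at a time and compare results, which is most of what prompt refinement involves.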

When you give this a try for yourself, you’ll see that you don’t always get exactly what you imagine when you’re crafting the prompt, but you’ll likely get extremely close.

The prompt above yielded detailed, interesting images that fit the world and aesthetic we described, but missed some of the specifics. Most notably, it turned the “fire katana” into a pool of burning oil of some kind. Though it wasn’t what we were looking for, it’s certainly evocative, and the overall image is usable if you’re comfortable making final tweaks in Photoshop to land on the result you envisioned.

Prompting is one of the most interesting opportunities for creatives to learn how to best use these tools to get dynamic and useful images.

Putting in a simple prompt with two or three words might yield exactly what you hoped for, but depending on the subject matter you may need to use more targeted prompts.

Crafting a prompt well is an art in and of itself.

Reactions to AI-generated image technology have been mixed

As with the birth of any new powerful tool, the response has varied.

Some have pointed out that this technology raises substantial ethical quandaries, many of which are being heatedly discussed by artists, brands, and everyday consumers alike.

For example, concept artists are losing work to beautiful landscapes created in seconds by an AI, even though that AI-produced image owes its existence to the countless artists whose work it learned from, none of whom receive credit or royalties.

These models are prone to bias.

The other main concern is the implicit bias of the datasets that the models are trained on. On balance, the internet has a Western bias, and we don’t need to go into detail about some of the horrible images that exist on the internet.

AI creators are aware of these biases and of the implications of some of the images that can be produced. Midjourney attempts to combat this issue by blacklisting specific words that could be used to generate pornographic images, while other developers have made efforts to prevent their models from generating images that could be used for political misinformation.
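A word blocklist of the kind described here can be sketched in a few lines. The terms and function names are placeholders, not Midjourney’s actual list or code. The sketch also shows the approach’s weakness: it matches whole words only, so misspellings and synonyms slip through, which makes a word list on its own only a partial defense.

```python
import re

# Placeholder terms; not any platform's real banned-word list.
BLOCKED_TERMS = {"blockedword", "anotherblockedword"}


def find_blocked_terms(prompt, blocked=BLOCKED_TERMS):
    """Return blocked terms that appear as whole words in the prompt (case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", prompt.lower())
    return sorted(set(words) & blocked)


def is_allowed(prompt, blocked=BLOCKED_TERMS):
    """A prompt passes only if it contains none of the blocked terms."""
    return not find_blocked_terms(prompt, blocked)


print(is_allowed("infinite lush landscape"))            # True
print(find_blocked_terms("A BlockedWord in a field"))   # ['blockedword']
print(is_allowed("a bl0ckedword in a field"))           # True: misspelling slips through
```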

CNN reported that experts are “…concerned that the open-ended nature of these systems — which makes them adept at generating all kinds of images from words — and their ability to automate image-making means they could automate bias on a massive scale. They also have the potential to be used for nefarious purposes, such as spreading disinformation.”

The bias disclaimer on the Craiyon website reads:

While the capabilities of image generation models are impressive, they may also reinforce or exacerbate societal biases. Because the model was trained on unfiltered data from the Internet, it may generate images that contain harmful stereotypes. The extent and nature of the biases of the DALL·E mini model have yet to be fully documented. Work to analyze the nature and extent of these limitations is ongoing and being documented in more detail in the DALL·E mini model card.

For these reasons, some users have sworn off these tools wholesale, while others appear to be using them flippantly, without considering the consequences for their industry or for those this technology may disproportionately affect.

Despite the moral questions, we want our client-partners and colleagues to understand this technology so that they can make a well-informed decision about whether to use it.

Our recommendation: Pay attention to this technology

When there are shifts in any industry and new technologies are developed, people are often rightfully concerned that they might be replaced. This is not new. Everything from the industrial revolution to the advent of the internet has eliminated jobs, but often opened the door for new ones as well.

In the last year alone, AI technology has exploded not only in popularity but also in its ability to produce compelling, interesting and often beautiful images. It won’t be long before it grows into new spaces, creates more opportunities, and dramatically accelerates iterative creative work.

There are absolutely valid concerns from creatives in the industry, along with the need for continually evolving best practices that will better account for the bias in data sets that are used for machine learning.

As AI artwork becomes more accessible and more brands use it, we’ll begin to see how much of a human element these processes still require. Ultimately, the AI is only as “creative” as what is put into the system, and it can only create something as useful as the prompt that guides the result.